File identification and metadata generation with ChatGPT

The problem

Using the Internet Archive's Wayback CDX Server API, I discovered that the Internet Archive holds approximately 20,000 digital documents related to ICAEW (PDFs, DOCs, DOCXs and so on). Some of these documents may no longer be available in our own holdings, so the archive could be a valuable resource.
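As a rough illustration of the kind of query involved, the sketch below builds a CDX search URL and turns the JSON reply into dictionaries. The domain `icaew.com`, the chosen filters, and the helper names `build_cdx_url` and `parse_cdx_rows` are assumptions for illustration, not necessarily the query used here.

```python
import json
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def build_cdx_url(domain, mimetype, limit=50):
    """Build a CDX query for captures under `domain` with the given MIME type."""
    params = [
        ("url", domain),
        ("matchType", "domain"),             # include subdomains
        ("filter", f"mimetype:{mimetype}"),  # filters are field:regex pairs
        ("filter", "statuscode:200"),
        ("collapse", "urlkey"),              # one row per unique URL
        ("fl", "timestamp,original,mimetype"),
        ("output", "json"),
        ("limit", str(limit)),
    ]
    return f"{CDX_ENDPOINT}?{urlencode(params)}"


def parse_cdx_rows(body):
    """With output=json the first row holds the field names."""
    rows = json.loads(body)
    if not rows:
        return []
    header, *records = rows
    return [dict(zip(header, record)) for record in records]
```

The URL can then be fetched with any HTTP client (for example `requests.get(url).text`) and the body passed to `parse_cdx_rows`; repeating the query per MIME type would cover DOC and DOCX files as well.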

The primary challenge lies in working out which documents are relevant to our needs. The filenames alone don't tell us enough, so each document would need to be opened and reviewed to record essential details such as its title, publication date, and authorship. Doing this manually would take a very long time.

The solution

I investigated whether ChatGPT could assist in extracting metadata from documents, and after some exploration, I discovered that it can. Initially, I ran into problems with ChatGPT's ability to pick out title, author, and date information directly from document text, most likely because plain extracted text loses the layout and typography cues that show which words are the title, the author, or the date. This led me to experiment with converting PDF documents into images and using GPT-4 Vision to process them. The results of this approach were pretty good.

To automate this process, I wrote a Python script with the following capabilities:

- It iterates through a directory of files.

- Filetype identification is performed using python-magic because the downloads contain a lot of non-standard file extensions, e.g. a lot of files have been saved with a .ashx extension 🤦‍♂️

- For PDF files, it renders the first page as an image. Then, it sends this image to OpenAI's API, requesting extraction of the document's title, author, issue number, and publication date. The prompt asks for the response in JSON format, with each piece of information given explicitly or as an empty string if unavailable. The requested date format is YYYY-MM-DD.

- For non-PDF files, the script first converts them to PDF, then follows the same procedure as for PDF files.

- The script collects and outputs these metadata into a CSV file.
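python-magic delegates to libmagic, which inspects file contents rather than trusting extensions. To illustrate the idea only, here is a minimal standard-library sketch that checks a few well-known leading byte signatures. `sniff_kind` and `sniff_file` are hypothetical helpers, not part of the script, and they are far cruder than libmagic (a ZIP signature, for instance, does not prove that a file is a DOCX).

```python
# Well-known leading byte signatures ("magic numbers").
PDF_MAGIC = b"%PDF-"
OLE_MAGIC = bytes.fromhex("D0CF11E0A1B11AE1")  # OLE2 container, used by legacy .doc
ZIP_MAGIC = b"PK\x03\x04"                      # ZIP container, used by .docx


def sniff_kind(header: bytes) -> str:
    """Classify a file from its first few bytes, ignoring its extension."""
    if header.startswith(PDF_MAGIC):
        return "pdf"
    if header.startswith(OLE_MAGIC):
        return "ole2"
    if header.startswith(ZIP_MAGIC):
        return "zip"
    return "unknown"


def sniff_file(path) -> str:
    """Read just enough of the file to compare against the signatures."""
    with open(path, "rb") as handle:
        return sniff_kind(handle.read(8))
```

A file saved as `report.ashx` whose first bytes are `%PDF-` would be classified as `"pdf"` here, which is the behaviour the script relies on python-magic for.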

This method supplements traditional text analysis with visual cues from the document layout, which in my experiments made metadata identification noticeably more reliable than working from the extracted text alone.

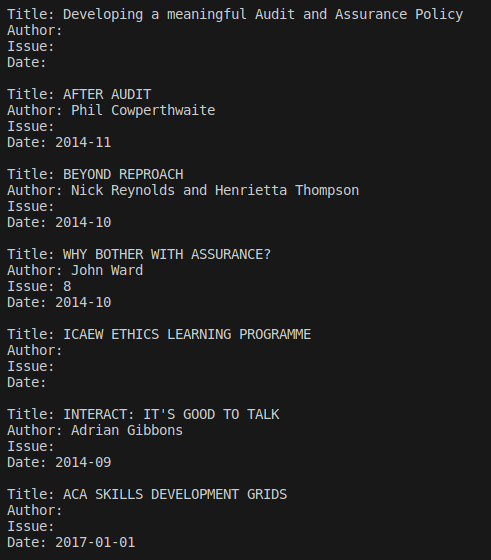

Below is an illustration of the script's output, showcasing its ability to accurately identify document metadata:

I have included the script in the Appendix section below, but I've let ChatGPT give a detailed breakdown of what the script is doing.

A breakdown of the process - written by ChatGPT 😊

This script is a complex tool designed for processing a batch of files, particularly focusing on PDFs and documents that can be converted to PDFs (e.g., Microsoft Word documents), to extract specific metadata from the first page of each document. The extracted information includes the document's title, author, issue number, and date, which is then saved in a CSV file. Here's a step-by-step breakdown of its functionality:

- Load Environment Variables: It starts by loading environment variables from a specified .env file, which includes sensitive information such as the OPENAI_API_KEY needed for API requests.

- CSV File Initialization: If not already present, it creates a CSV file with headers for storing the results of the document processing, including metadata and any conversion status or errors.

- Document Processing Loop: The script iterates over files in a specified directory, limited to a set range (START to END) for batch processing. For each file, it checks whether the file is a PDF or a document type that can be converted to PDF (e.g., Word documents).

  - If the file is a PDF, it processes the file directly.

  - If the file is a convertible document type, it first converts the file to PDF using LibreOffice in headless mode (without a GUI), then processes the converted PDF file.

- PDF Processing: For each PDF, the script converts the first page into an image at 200 DPI (dots per inch), then encodes this image in base64 format. This encoded image is sent to the OpenAI chat completions API (the script uses the gpt-4-vision-preview model) with a request to extract the document's title, author, issue number, and date. The script expects the response in JSON format.

- Response Handling and CSV Writing: The script parses the JSON response from the API, extracting the requested metadata. This information, along with the file name and whether the document was converted to PDF, is appended to the CSV file. In case of errors during processing or API response handling, error entries are also logged in the CSV.

- Utility Functions: The script includes several utility functions for specific tasks:

  - load_environment_variables: Loads environment variables from a file.

  - encode_image: Encodes an image file to base64.

  - create_csv_if_not_exists: Creates a CSV file with specified headers if it doesn't exist.

  - process_pdf_file: Handles the processing of a PDF file, including conversion to image, API request, and CSV logging.

  - make_openai_request: Sends a request to the OpenAI API with the encoded image and desired query.

  - write_results_to_csv and write_error_to_csv: Handle writing successful responses and errors to the CSV file, respectively.

  - is_pdf: Checks if a file is a PDF using the magic library.

  - convert_to_pdf: Converts a file to PDF using LibreOffice in headless mode.

Overall, this script automates the process of extracting specific metadata from documents, utilizing an OpenAI API for image-based query responses, and logs the results for further analysis or archiving purposes.
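The model's reply sometimes arrives wrapped in a Markdown code fence, which is why the script strips backticks before parsing. A slightly more defensive variant is sketched below, under the assumption that the reply contains exactly one JSON object. `extract_json_object` and `normalise_metadata` are hypothetical helpers, not part of the script; they also blank out any date that is not in the requested YYYY-MM-DD form.

```python
import json
import re
from datetime import datetime

FIELDS = ("title", "author", "issue", "date")


def extract_json_object(reply):
    """Parse the text between the first '{' and the last '}' as JSON."""
    start = reply.find("{")
    end = reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    return json.loads(reply[start:end + 1])


def is_iso_date(value):
    """True only for a real calendar date written as YYYY-MM-DD."""
    if not re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True


def normalise_metadata(reply):
    """Return the four expected fields as stripped strings, '' when missing."""
    data = extract_json_object(reply)
    cleaned = {field: str(data.get(field) or "").strip() for field in FIELDS}
    if not is_iso_date(cleaned["date"]):
        cleaned["date"] = ""
    return cleaned
```

Missing keys become empty strings instead of raising `KeyError`, so one incomplete reply would not turn an otherwise usable row into an error entry.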

Appendix

import os
import csv
import json
import requests
import subprocess
import magic
import base64
from pathlib import Path, PurePath
from pdf2image import convert_from_path

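def load_environment_variables(env_file):
    # Read KEY=VALUE pairs from the .env file into the process environment
    with open(env_file) as f:
        for line in f:
            key, sep, value = line.partition("=")
            # Skip blank lines, comments, and lines without "="
            if not sep or not key.strip() or key.lstrip().startswith("#"):
                continue
            os.environ[key.strip()] = value.strip()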

def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

def create_csv_if_not_exists(csv_file_path, headers):
    if not os.path.exists(csv_file_path):
        with open(csv_file_path, mode='w', newline='') as file:
            writer = csv.writer(file)
            writer.writerow(headers)

def process_pdf_file(pdf_file, OPENAI_API_KEY, converted_to_pdf):
    # Note: csv_file_path is the module-level variable set in the __main__ block
    try:
        # Render only the first page of the PDF
        images = convert_from_path(
            str(pdf_file), first_page=1, last_page=1, dpi=200)
        if images:
            image_path = pdf_file.stem + '_first_page.png'
            images[0].save(image_path, 'PNG')
            base64_image = encode_image(image_path)
            response = make_openai_request(base64_image, OPENAI_API_KEY)
            os.remove(image_path)  # Cleanup
            if response:
                write_results_to_csv(
                    csv_file_path, pdf_file.name, response, converted_to_pdf)
                return
        # If no images or response, write an error entry to CSV
        write_error_to_csv(csv_file_path, pdf_file.name,
                           "No images or response")
    except Exception as e:
        print(f"An error occurred processing {pdf_file.name}: {e}")
        write_error_to_csv(csv_file_path, pdf_file.name, str(e))

def make_openai_request(base64_image, OPENAI_API_KEY):
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {OPENAI_API_KEY}"
    }
    payload = {
        # Model name as used when this was written; substitute a current
        # vision-capable model if this one is unavailable
        "model": "gpt-4-vision-preview",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": (
                            'What is the title of this document? Who is the author? '
                            'What is the issue number? What is the date? '
                            'I would like the response to be in JSON format, for example: '
                            '{"title": data, "author": data, "issue": data, "date": data}. '
                            'If you are unable to find a match, set as an empty string. '
                            'The dates should be in the form YYYY-MM-DD.'
                        )
                    },
                    {
                        "type": "image_url",
                        "image_url": {
                            # The first page is saved as PNG, so label it as such
                            "url": f"data:image/png;base64,{base64_image}"
                        }
                    }
                ]
            }
        ],
        "max_tokens": 300
    }
    try:
        response = requests.post(
            "https://api.openai.com/v1/chat/completions", headers=headers, json=payload)
        response.raise_for_status()  # This will raise an exception for HTTP error codes
        return response.json()
    except Exception as err:
        print(f"An error occurred: {err}")
        return None

def write_results_to_csv(csv_file_path, pdf_file_name, response_data, converted_to_pdf):
    # Extracting the 'content' string from the dictionary
    content = response_data['choices'][0]['message']['content']
    # Converting the JSON string to a Python dictionary.
    # strip() removes any leading/trailing backticks, the letters of "json" and
    # newlines, i.e. a Markdown code fence wrapped around the JSON object
    content_dict = json.loads(content.strip('`json\n'))
    # Extracting the required fields
    title = content_dict['title']
    author = content_dict['author']
    issue = content_dict['issue']
    date = content_dict['date']
    # Printing the extracted information
    print(f"Title: {title}")
    print(f"Author: {author}")
    print(f"Issue: {issue}")
    print(f"Date: {date}\n")
    try:
        with open(csv_file_path, mode='a', newline='') as file:
            writer = csv.writer(file)
            # Adjust this based on how you parse the JSON response
            writer.writerow([pdf_file_name, title, author,
                             issue, date, str(converted_to_pdf)])
    except Exception as e:
        print(f"Failed to write results for {pdf_file_name} to CSV: {e}")

def write_error_to_csv(csv_file_path, pdf_file_name, error_message):
    try:
        with open(csv_file_path, mode='a', newline='') as file:
            writer = csv.writer(file)
            # Pad the metadata columns so the message lands in the 'Error' column
            # (Filename, Title, Author, Issue, Date, Conversion to PDF, Error)
            writer.writerow([pdf_file_name, "", "", "", "", "", error_message])
    except Exception as e:
        print(f"Failed to write error for {pdf_file_name} to CSV: {e}")

def is_pdf(file_path):
    # Use python-magic to determine if the file is a PDF
    mime = magic.Magic(mime=True)
    mimetype = mime.from_file(str(file_path))
    return mimetype == 'application/pdf'

def convert_to_pdf(input_path, output_dir):
    try:
        # Extract the base name of the input file and construct the output file name
        base_name = os.path.basename(input_path)
        file_name_without_ext = os.path.splitext(base_name)[0]
        output_file_path = os.path.join(
            output_dir, f"{file_name_without_ext}.pdf")
        # Construct the LibreOffice command
        cmd = [
            'libreoffice', '--headless', '--convert-to', 'pdf',
            '--outdir', output_dir, input_path
        ]
        # Execute the command
        subprocess.run(cmd, check=True)
        # Return the path of the newly created PDF
        return output_file_path
    except subprocess.CalledProcessError as e:
        print(f"Error during conversion: {e}")
        return None

if __name__ == "__main__":
    env_file = '.env'
    files_dir = ''  # Set this to the directory containing the downloaded files
    csv_file_path = 'results.csv'
    START = 0
    END = 500

    load_environment_variables(env_file)
    OPENAI_API_KEY = os.getenv('OPENAI_API_KEY')

    create_csv_if_not_exists(
        csv_file_path, ['Filename', 'Title', 'Author', 'Issue', 'Date', 'Conversion to PDF', 'Error'])

    # Process a fixed slice of the (sorted) directory listing per run
    for file_path in sorted(Path(files_dir).iterdir(), key=lambda p: p.name)[START:END]:
        if is_pdf(file_path):
            process_pdf_file(file_path, OPENAI_API_KEY, False)
        else:
            mime = magic.Magic(mime=True)
            mime_type = mime.from_file(str(file_path))
            if mime_type in ['application/msword', 'application/vnd.openxmlformats-officedocument.wordprocessingml.document']:
                converted = convert_to_pdf(file_path, files_dir)
                if converted is None:
                    # Conversion failed: log it and move on to the next file
                    write_error_to_csv(
                        csv_file_path, file_path.name, "Conversion to PDF failed")
                    continue
                pdf_path = PurePath(converted)
                process_pdf_file(pdf_path, OPENAI_API_KEY, True)
                os.remove(pdf_path)  # Remove the temporary converted PDF